This is a complementary blog post to a video from the ShopifyDevs YouTube channel. In this first part of a three-part series, Zameer Masjedee, a Solutions Engineer with Shopify Plus, introduces some of the best practices for addressing rate limits by walking through a live example with a private app in a development store. Zameer begins by defining what a rate limit is and why it’s important to you. He then introduces the “leaky bucket” algorithm Shopify uses and explains how it benefits merchants and partners alike.

Note: All images in this article are hyperlinked to the associated timestamps of the YouTube video, so you can click on them for more information.

Getting started: tools you will need

To begin, from a tooling perspective, we'll need a development store. Development stores are free to create with a Shopify Partner account. You’ll also need a private app on that development store, which we'll walk through setting up, as well as your favorite code editor. In my case, I'll be using VS Code, but you can use whatever you like.

If you want to follow along exactly with what we're doing, I'll be using Ruby with the Sinatra framework. But you can feel free to use whatever programming language you like.

At the end of the series, we'll actually be putting together a complete end-to-end private app on Shopify that tries to make some API calls, gets rate limited, and then deals with it.

If you're interested in this kind of content, make sure to subscribe to our ShopifyDevs YouTube channel, and turn on your notifications so you know when we post a new video.

Let’s dive in.

Introducing the API rate limit

All right, first things first. What is an API rate limit?

For that, we're going to jump into some documentation.

An API rate limit is essentially a way for Shopify to ensure stability of the platform. We have a super flexible API offering that's available in both REST, the long-standing web standard, and GraphQL, the newer option. If there weren't a rate limit in place, people could effectively make as many API calls as they wanted, at any moment in time.

At surface value that might seem pretty good. Why would you want to in any way be restricted when it comes to managing data on the Shopify platform?

The issue with that thought process is that it doesn't do well at scale. If any developer has the opportunity to access the Shopify API and can just begin making an unlimited number of API requests, that's a huge strain on the Shopify servers. Ultimately, it could lead to downtime.

Downtime affects the whole platform: merchants can’t access their stores, and your apps won’t work. So, an API rate limit is a way for us to control the number of requests any given app can make on the platform. It ensures that you are making API calls efficiently and that when you do make an API call, you get a response.

Our servers stay up and response times are fast because, over the entire platform, we're able to maintain a stable level of requests.

Build for the world’s entrepreneurs

Want to check out the other videos in this series before they are posted on the blog? Subscribe to the ShopifyDevs YouTube channel. Get development inspiration, useful tips, and practical takeaways.

Exploring the different types of rate limits

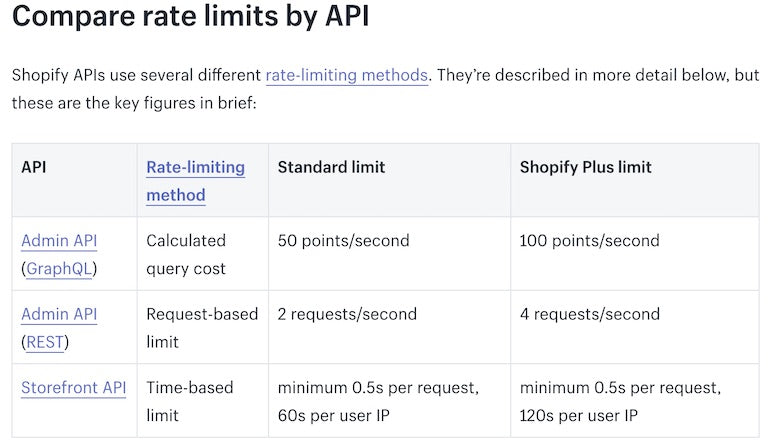

So let’s take a look at some specifics. First and foremost, it’s important to understand that there are different types of API rate limits.

The most common type of rate limit is probably the request-based limit, which is used by the REST Admin API. In a request-based limit, the rate limit is associated with the number of individual requests you're making.

As you can see in the comparison of rate limits by API chart above, we have one column that specifies the standard limit for standard Shopify plans. For Shopify Plus merchants, those numbers are effectively doubled.

I'll try to speak in standard terms in most cases.

The REST Admin API: a request-based rate limit system

With the Shopify REST Admin API, you get two requests per second. That limit is easy to keep track of, and it gives you a sense of how many requests you can make over the course of a minute, an hour, or even a day.

With this approach, it doesn’t matter what kind of request you’re making. Whether it repeats a previous request, updates something, deletes something, or just gets data, every request counts the same toward the limit of two requests per second.
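To get a feel for those numbers, here is a minimal sketch of the arithmetic. It assumes only the rates mentioned in this article (two requests per second on a standard plan, roughly double on Shopify Plus). The helper name `sustained_budget` is made up for illustration and isn't part of any Shopify library.

```ruby
# Sustained request budget implied by a steady per-second rate.
SECONDS_PER_MINUTE = 60
SECONDS_PER_HOUR   = 60 * SECONDS_PER_MINUTE
SECONDS_PER_DAY    = 24 * SECONDS_PER_HOUR

# Hypothetical helper: how many requests a steady rate adds up to over time.
def sustained_budget(requests_per_second)
  {
    per_minute: requests_per_second * SECONDS_PER_MINUTE,
    per_hour:   requests_per_second * SECONDS_PER_HOUR,
    per_day:    requests_per_second * SECONDS_PER_DAY
  }
end

standard = sustained_budget(2) # standard plan rate from the article
plus     = sustained_budget(4) # assumes the "effectively doubled" Plus rate

puts standard[:per_minute] # 120
puts standard[:per_hour]   # 7200
puts standard[:per_day]    # 172800
puts plus[:per_day]        # 345600
```

These are upper bounds for steady traffic. They say nothing yet about bursts, which is where the leaky bucket comes in later.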

The GraphQL Admin API: a points-based rate limit system

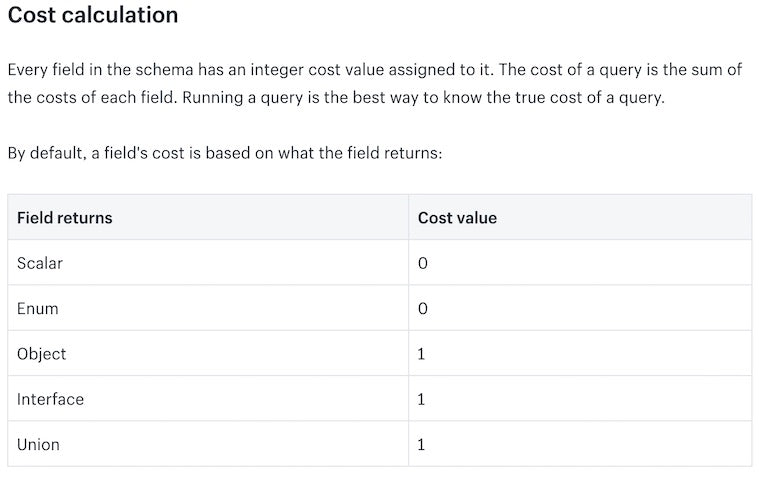

GraphQL takes request limits to the next level, using what we call a calculated query cost. This approach still gives you the ability to read and update data via queries and mutations, but the main difference is that we take into account the complexity associated with each of those requests—since some things, like updating data, are more resource-intensive than simply fetching it.

When it comes to any GraphQL request, we consider your rate limit in a points system. On a standard plan, you're allotted 50 points every second. The way the points work is really simple. Any mutation, meaning a request that updates, creates, or deletes data, costs 10 points. Fetching an object, on the other hand, only costs one point.

Now, GraphQL is a little bit interesting. We won't deep dive too much in this particular video, but it's possible to nest data within GraphQL.

Let's say that you wanted an order, but you wanted every single line item from that order as well. That's not going to just cost one point. If your order has ten line items, each of those line items will cost one point and the order itself will cost one point, so we'll be looking at 11 points for that request.

That's roughly how it works, and it's pretty easy to calculate yourself when you're taking a look at the GraphQL API.
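If you'd like to see that calculation written down, here's a rough sketch. It uses only the simplified rules from this article (10 points per mutation, one point per object, nested objects included), so treat it as an approximation rather than the exact cost calculation the API performs. `nested_query_cost` is a hypothetical helper name.

```ruby
# Simplified cost model, taken from the rules stated in this article.
OBJECT_COST       = 1  # points per object fetched
MUTATION_COST     = 10 # points per mutation
POINTS_PER_SECOND = 50 # standard plan allotment

# Hypothetical helper: cost of fetching one parent object plus its nested children.
def nested_query_cost(child_count)
  OBJECT_COST + child_count * OBJECT_COST
end

order_with_ten_line_items = nested_query_cost(10)
puts order_with_ten_line_items # 11

# How many of each fit into one second's allotment (integer division).
puts POINTS_PER_SECOND / order_with_ten_line_items # 4
puts POINTS_PER_SECOND / MUTATION_COST             # 5
```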

In most cases, GraphQL is just more efficient. So if you're on the fence right now about what to use, we strongly recommend GraphQL for that reason (and for a couple more that we'll mention later on in the series).

The Storefront API: a time-based rate limit system

The last method of API rate limits is a time-based limit as used on the Storefront API. This approach isn’t about the number of requests you're making, but more so the amount of time between those requests.

This is used for our headless implementations, so we won't spend too much time on it in this article, but it’s worth understanding the difference.

The leaky bucket algorithm

The second main piece when it comes to understanding rate limits on Shopify is understanding the leaky bucket algorithm.

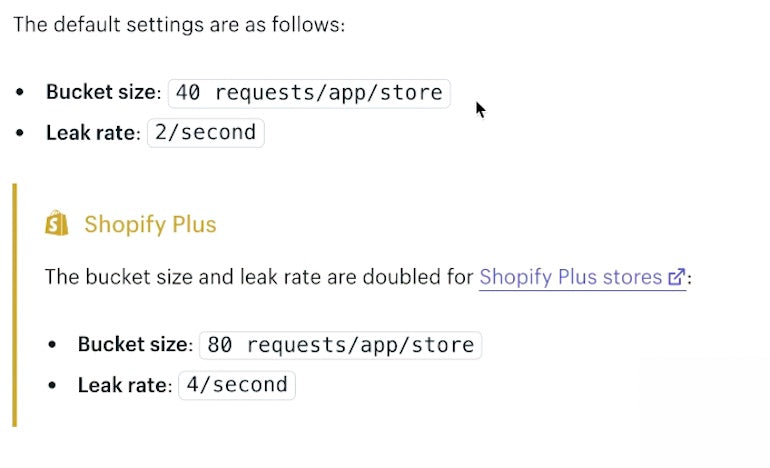

The best way to think about the leaky bucket algorithm is to think about a bucket that holds a predetermined number of marbles. One marble a second leaks out, which means you can add another marble at that same rate. If your bucket gets too full, you can’t add any more marbles. How many marbles your bucket can hold depends on a couple of things.

A standard plan using the REST Admin API has a bucket size of 40 requests per app, per store.

That's to make explicit that if you have a public app, and it's installed on two stores, there's no relationship or dependency between those two installations. Each installation has its own independent rate limit. In the case of the REST Admin API, each is allotted a bucket size of 40 requests, so you have room to make 40 requests from your private app or your public app.

Let's say that we wanted to create a new product. Awesome. Like we mentioned earlier, everything in REST is straightforward. Every request counts as one towards your rate limit.

So we create a product, and we throw a marble into the bucket. The second time we want to do something, like updating or deleting data, we'll toss another marble into the bucket.

You can see how, over time, this bucket is slowly going to become full. And you can only make requests when you have room to throw another marble into the bucket. If it's full, and you try to throw a marble in, Shopify is going to respond with a 429 error: "Too many requests." "You've hit your rate limit." "We can't process your request." You're going to have to wait.
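The "wait and try again" idea can be sketched in a few lines of Ruby. This is a minimal illustration, not the code from the video. `Response` and `with_rate_limit_retry` are hypothetical names standing in for whatever your HTTP client provides, and the sketch assumes the server may suggest a wait time through a `Retry-After` header, falling back to a default when it doesn't.

```ruby
# Stand-in for an HTTP client's response object (hypothetical).
Response = Struct.new(:status, :headers)

# Calls the given block until it returns a non-429 response or attempts run out.
# `sleeper` is injectable so the example can run without actually waiting.
def with_rate_limit_retry(max_attempts: 5, default_wait: 1.0, sleeper: ->(s) { sleep(s) })
  attempts = 0
  loop do
    attempts += 1
    response = yield
    return response unless response.status == 429
    raise "still rate limited after #{attempts} attempts" if attempts >= max_attempts

    # Assumption: a Retry-After header, when present, holds a wait in seconds.
    wait = Float(response.headers.fetch("Retry-After", default_wait))
    sleeper.call(wait)
  end
end

# Simulated exchange: one rejected request, then a successful one.
fake_responses = [
  Response.new(429, { "Retry-After" => "2.0" }),
  Response.new(200, {})
]
waits = []
result = with_rate_limit_retry(sleeper: ->(s) { waits << s }) { fake_responses.shift }

puts result.status # 200
puts waits.inspect # [2.0]
```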

That's where the leaky part comes in.

You do have this bucket of 40 requests. However, at the same time, it's constantly draining previous requests.

What does that mean?

That means that with a leak rate of two per second, every second your bucket gets room for two more marbles, or two more requests, assuming there's anything in it at all. If it's completely empty because you haven't made any requests over the last hour, the bucket size is still 40. You're not going to gain any additional room.

You can't leak out anything that isn't there.

If you do make requests, let's say you make ten requests all within the same second, then you have filled up 10 out of 40 marbles in your bucket. One second later two of them will go. Your bucket now has eight marbles and has room for 32 more, or for 32 more requests.
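Here's a small model of that bucket, using the REST numbers from this article (a capacity of 40 and a leak rate of two per second). It's a sketch for building intuition about your own app's usage, not Shopify's server-side implementation. The `LeakyBucket` class and its method names are made up for this example.

```ruby
# A minimal leaky bucket model: capacity of 40 requests, leaking 2 per second.
class LeakyBucket
  attr_reader :level

  def initialize(capacity: 40, leak_rate: 2)
    @capacity  = capacity
    @leak_rate = leak_rate
    @level     = 0
  end

  # Try to add one request (one marble). Returns false when the bucket is
  # full, which is the situation where the API would answer with a 429.
  def add_request
    return false if @level >= @capacity

    @level += 1
    true
  end

  # Let the given number of seconds pass. The level never drops below zero,
  # because you can't leak out anything that isn't there.
  def tick(seconds = 1)
    @level = [@level - @leak_rate * seconds, 0].max
  end

  # How many more requests fit right now.
  def room
    @capacity - @level
  end
end

bucket = LeakyBucket.new
10.times { bucket.add_request } # ten requests within the same second
bucket.tick                     # one second later, two marbles leak out

puts bucket.level # 8
puts bucket.room  # 32
```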

Why the leaky bucket algorithm?

The reason we implemented this really straightforward approach is that if we just gave a standard rate limit of two calls per second, you wouldn't have the flexibility to do a burst of requests.

Let's say your application works like this: an order comes in, and every time, you update all the products that are included in that order, and you increment a value that's stored in their tags (perhaps to keep track of how many products you’ve sold in total).

Well, every order is going to have X number of product updates associated with it.

You can quickly see how the more orders that come in, the more requests you'll have to make.

With the leaky bucket method, because you have a bucket size of 40, if an order with 20 line items comes in, you can make 20 requests all at once, one for each of those products.

Even though the leak rate is two calls per second, you'll have room in your bucket because you'll have 20 marbles going in, and one second later, two leaking out, which leaves you with only 18. You can see how the ability to make a burst or a group of requests all at once gives you and your app a little bit more flexibility.

And that's why we've chosen to implement it that way.
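To make the difference concrete, here's a rough comparison of how long a group of requests would take under a strict two-per-second limit versus a bucket of 40 that leaks two per second. Both helper names are hypothetical, and the model is deliberately simple: it counts whole seconds and ignores network time.

```ruby
BUCKET_SIZE = 40 # REST bucket size from the article
LEAK_RATE   = 2  # requests leaked per second

# Seconds needed to send `count` requests when you may never exceed
# `rate` requests per second (no bursting allowed).
def seconds_without_burst(count, rate = LEAK_RATE)
  (count.to_f / rate).ceil
end

# Seconds of waiting needed to send `count` requests into a leaky bucket
# that currently holds `level` requests. Zero means the burst fits right away.
def seconds_with_bucket(count, level: 0, capacity: BUCKET_SIZE, leak_rate: LEAK_RATE)
  overflow = level + count - capacity
  return 0 if overflow <= 0

  (overflow.to_f / leak_rate).ceil
end

# An order with 20 line items arrives while the bucket is empty.
puts seconds_without_burst(20)          # 10
puts seconds_with_bucket(20)            # 0

# The same order arrives when the bucket already holds 30 requests.
puts seconds_with_bucket(20, level: 30) # 5
```

The burst only helps while there is room in the bucket. Under sustained heavy load, you're back to the leak rate of two requests per second.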

When it comes to GraphQL, it’s very similar. You are also given a bucket; the bucket just happens to have a different size.

In this case, the bucket is given a thousand cost points. And the leak rate is 50 per second. Again, mutations and objects vary in cost.

We have more definitions in the documentation of how much mutations and queries cost.

This information will help you understand how many requests you can make over a period of time using GraphQL.
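As a rough illustration of that kind of planning, the sketch below estimates how long you'd wait before a query of a given cost fits. It assumes only the two numbers from this article, a bucket of 1,000 points and a leak rate of 50 points per second, and it tracks the points you still have available rather than the marbles already in the bucket. `seconds_until_affordable` is a hypothetical helper name.

```ruby
GRAPHQL_BUCKET_POINTS = 1000 # bucket size from the article
GRAPHQL_LEAK_RATE     = 50   # points freed up per second

# Seconds to wait before a query costing `cost` points fits,
# given that `available` points are currently free in the bucket.
def seconds_until_affordable(cost, available)
  raise ArgumentError, "cost exceeds bucket size" if cost > GRAPHQL_BUCKET_POINTS

  shortfall = cost - available
  return 0 if shortfall <= 0

  (shortfall.to_f / GRAPHQL_LEAK_RATE).ceil
end

puts seconds_until_affordable(11, 1000) # 0
puts seconds_until_affordable(500, 100) # 8

# Time for a completely full bucket to drain back to empty.
puts GRAPHQL_BUCKET_POINTS / GRAPHQL_LEAK_RATE # 20
```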

That finishes our introduction to rate limits. We've covered the different methods that we have for the different types of requests, why rate limits matter for platform stability, and why we use the leaky bucket algorithm.

The leaky bucket algorithm: additional data to consider

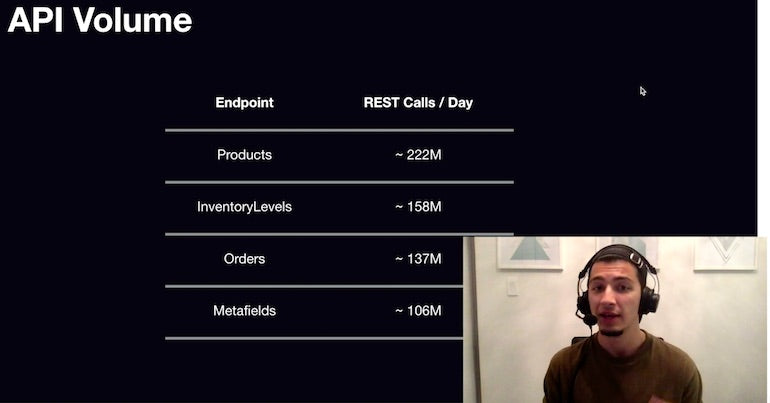

The last thing I want to share is some fun data because I love data. It’s our API volume. This is just one day that I've taken arbitrarily from a previous dataset over the last 30 days or so.

In one day, you can see how much volume we're talking about across four of our most popular REST endpoints.

Products sees 222 million unique API calls per day, InventoryLevels sees 158 million, Orders sees 137 million, and Metafields sees 106 million.

There's a significant amount of traffic that goes through the Shopify servers. We implement rate limits because it's the responsible thing to do, and because it means we can offer the highest level of stability and uptime for our merchants and our partners.

We appreciate developers building applications responsibly to work within those rate limits. In our next article of this series, we’ll talk a bit more about rate limit best practices.

Stay tuned for the next video in this series on our ShopifyDevs YouTube channel, where we'll be looking at how to actually build your code to respect these rate limits.